Project Portfolio

School and Personal Projects

School Status

I completed my master's degree in Electrical and Computer Engineering at UCLA with a specialization in circuits and embedded systems.

Personal Projects

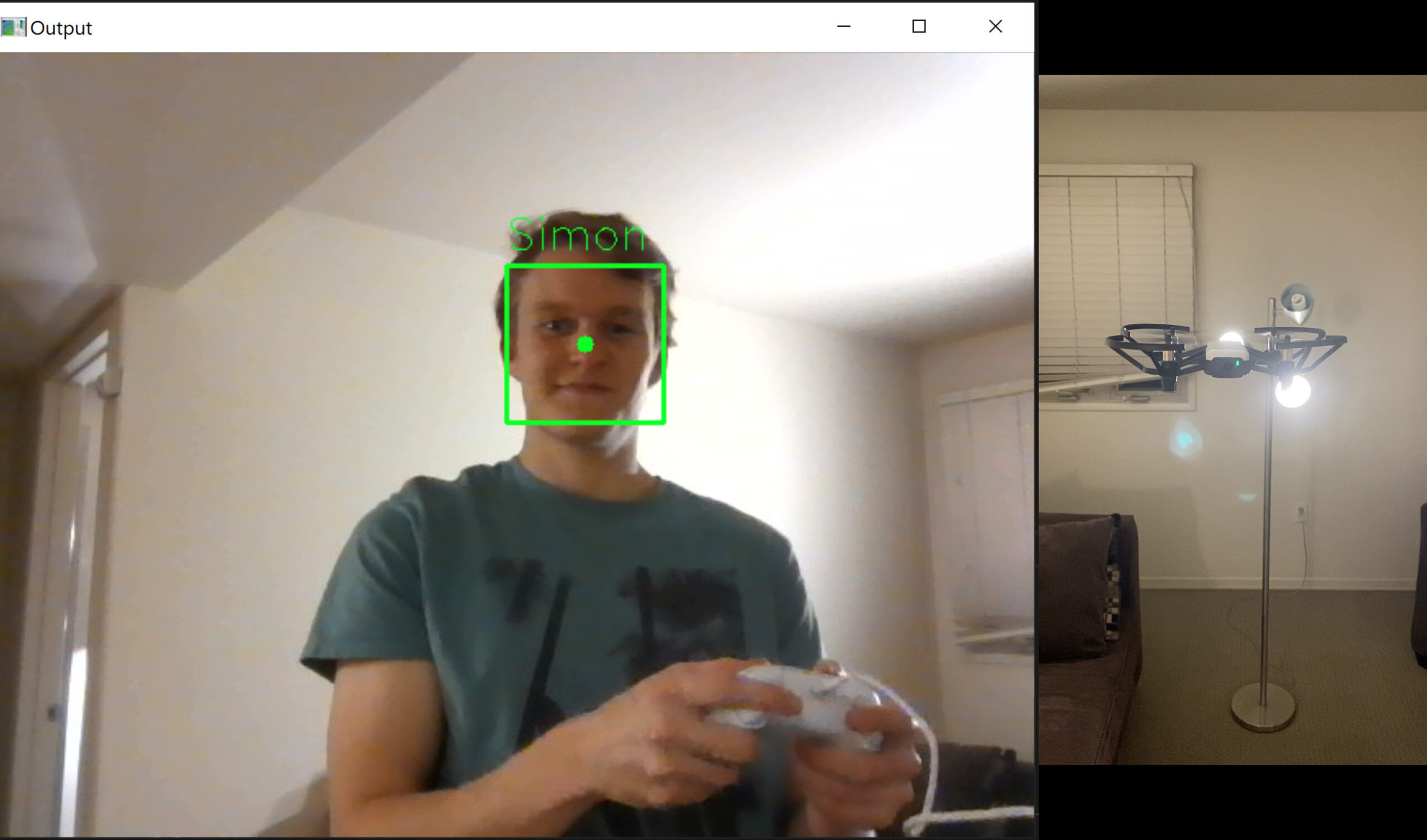

DJI Tello Drone Control and Facial Recognition

- Using Python, I configured a DJI Tello drone to be fully controlled from an Xbox controller over Bluetooth. On controller command, the drone can perform mid-air takeoffs, detect its base to return and land, perform flips, move in any direction at adjustable speed, execute pitch and yaw rotations, and take pictures and videos. I also used convolutional neural networks with Python's OpenCV library so the drone could recognize my face and autonomously follow it on command. Because the drone's processor is prone to overheating, this processing is offloaded and run remotely on a Raspberry Pi, where PID control algorithms keep the drone following its target. The drone was also programmed to use computer vision libraries to build a map of its surroundings and fly to locations specified on command.
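The face-following behavior relies on PID control. As a minimal sketch (not the project's code), the controller below turns the horizontal offset between a detected face and the frame center into a yaw-rate command. The class and function names, the gains, and the symmetric ±100 command range are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PID:
    """Discrete PID controller: u = kp*e + ki*integral(e dt) + kd*de/dt."""
    kp: float
    ki: float
    kd: float
    out_limit: float = 100.0  # assumed symmetric command range of +/-100
    _integral: float = field(default=0.0, init=False)
    _prev_error: Optional[float] = field(default=None, init=False)

    def update(self, error: float, dt: float) -> float:
        # Accumulate the integral term with a simple rectangle rule.
        self._integral += error * dt
        # No derivative on the first sample, since there is no previous error yet.
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        u = self.kp * error + self.ki * self._integral + self.kd * derivative
        # Saturate so the command stays inside the accepted range.
        return max(-self.out_limit, min(self.out_limit, u))


def yaw_command(face_x: float, frame_width: int, pid: PID, dt: float) -> int:
    """Hypothetical helper: yaw-rate command from a face's x-coordinate in pixels."""
    error = face_x - frame_width / 2  # positive when the face is right of center
    return int(pid.update(error, dt))
```

For example, with `kp=0.5` and `ki = kd = 0`, a face 100 pixels right of center in a 960-pixel-wide frame yields a yaw command of 50. The derivative term damps overshoot and the integral term removes steady-state offset; separate PID instances could handle forward/back distance and altitude in the same way.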

UCLA School Projects

Master's Degree: Electrical and Computer Engineering

Focus: Embedded Systems and Machine Learning

UCLA Projects

Tele-Operation ML User Character Prediction Algorithm

- I programmed an interface that links an operator's motion to a programmed "remote" robot in order to simulate and predict teleoperation scenarios. The interface trains on each user's individual movements and, during periods of "operation," applies an inverse reinforcement learning algorithm to keep the robot moving when an induced signal dropout/disconnection occurs. Depicted is the training on, and execution of, the character/movement 'a' with dropout. When an induced disconnect occurs (shown with pink lines), the user (left environment) keeps moving, while the robot (right environment) uses the learned ML policy to continue the motion, replicating the movement based on its training. The pink lines therefore highlight the difference between user and robot movement while the connection is down, and black lines show where the robot and user are in sync. The design could distinguish between 10 different movements/characters with an 87% success rate, as judged by visual inspection.

Oculus Virtual Reality Smart Device Controller

- I developed a VR application that lets users control real smart devices remotely from virtual environments. The design was motivated by the growing number of smart devices on the market and the increasing demand for intuitive control of complex devices to enable remote work. The application lets users control devices much as they would if they were physically present, but from anywhere. To do this, I implemented a cloud TCP server in C++ and a local TCP client embedded in the Oculus application, which could connect to and control smart devices; for the classroom demonstration, a smart light was used. I built an interactive VR application in Unity that simulated a home and a classroom environment, which the user could teleport between to explore the potential use cases for controlling devices remotely in VR. I brought an iPhone LiDAR scan of a real classroom into the application, showing how easily a real-world environment can be replicated in VR. Although the application was only programmed to control a light, it demonstrated the potential to intuitively manage many smart devices remotely. I also designed hand-interaction recognition in the VR environment, demonstrating that VR can support more advanced interactions with devices than are available in the real world. The application also demonstrated intuitive feedback animations for users and 3D spatial organization/representation of devices, which today's smart-device interfaces lack.
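The cloud server in this project was written in C++. Purely to illustrate the client/server pattern, here is a minimal Python sketch of a line-based TCP command protocol: a client sends a text command such as `light on`, and the server updates a device state table and replies. The command format, the `DEVICE_STATE` table, the reply strings, and the helper names are hypothetical, not the project's actual protocol.

```python
import socket
import socketserver
import threading

# Hypothetical device table; the project's demo controlled one smart light.
DEVICE_STATE = {"light": "off"}
STATE_LOCK = threading.Lock()


class CommandHandler(socketserver.StreamRequestHandler):
    """Server side: apply newline-terminated commands such as 'light on'."""

    def handle(self) -> None:
        for raw in self.rfile:  # one command per line until the client disconnects
            parts = raw.decode("utf-8").strip().split()
            if len(parts) == 2 and parts[0] in DEVICE_STATE and parts[1] in ("on", "off"):
                with STATE_LOCK:
                    DEVICE_STATE[parts[0]] = parts[1]
                reply = f"OK {parts[0]} {parts[1]}\n"
            else:
                reply = "ERR unknown command\n"
            self.wfile.write(reply.encode("utf-8"))


def send_command(host: str, port: int, command: str) -> str:
    """Client side: send one command and return the server's reply line."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall((command + "\n").encode("utf-8"))
        with sock.makefile("r", encoding="utf-8") as reader:
            return reader.readline().strip()


def start_server(host: str = "127.0.0.1", port: int = 0) -> socketserver.ThreadingTCPServer:
    """Start the server on a background thread; port 0 lets the OS pick a free port."""
    server = socketserver.ThreadingTCPServer((host, port), CommandHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

With a server from `start_server()`, calling `send_command("127.0.0.1", server.server_address[1], "light on")` returns `"OK light on"`. A plain-text, line-based protocol is easy to debug with a raw TCP tool and would be straightforward to mirror in C++.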

-

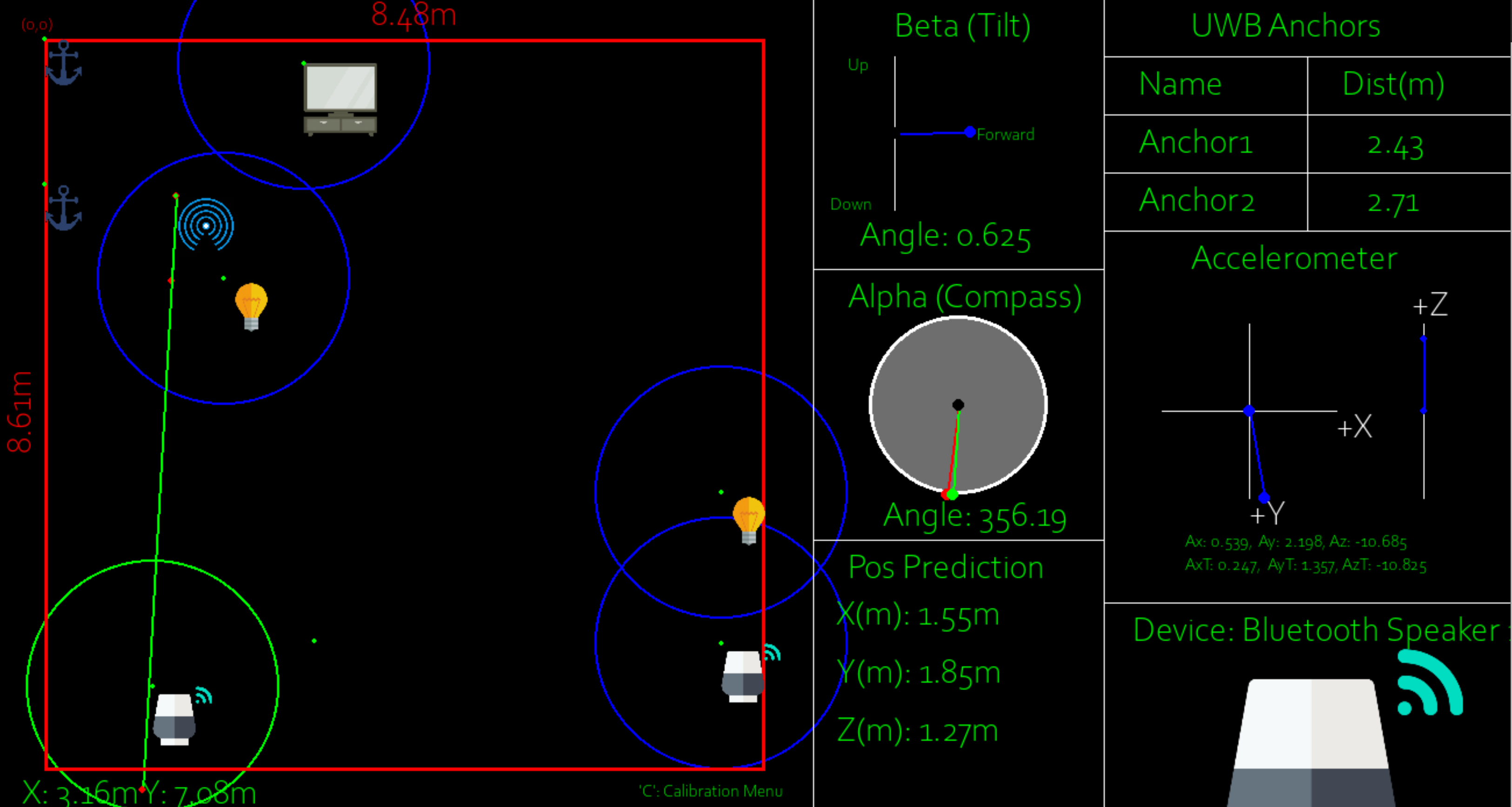

As the number of smart devices in a household continues to grow, there is need for a system to be able to distinguish between and control the devices. The goal of this project was to develop a system building off of sensors available in current smartphones to allow a user to easily manage and interact with multiple smart devices indoors. Current smartphones are equipped with both IMU and UWB sensors. By fusing these sensors measurements and introducing two UWB anchors, orientation and pose estimations can be calculated from a users controller or phone. With known positions of the devices in a room, the design can detect which device a user is pointing at to be controlled in a room and render it in a custom GUI. After this selection is made the user can control the smart device with stimulus broadcasted over BLE. For full project details and video demonstration visit

Report Website:

- https://simonschirber.github.io/UWBWebsite/report
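Once the pose is estimated, selecting a device reduces to geometry: compare the pointing direction with the bearing from the user to each known device and pick the closest one within a tolerance. The sketch below assumes the IMU/UWB fusion (not shown) already provides the user's position and a yaw/pitch orientation in the room's coordinate frame; the angle convention, the 15° tolerance, and all names are illustrative assumptions.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def direction_from_yaw_pitch(yaw_deg: float, pitch_deg: float) -> Vec3:
    """Unit pointing vector from yaw (about the vertical z-axis) and pitch (elevation)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (math.cos(pitch) * math.cos(yaw),
            math.cos(pitch) * math.sin(yaw),
            math.sin(pitch))


def angle_between(a: Vec3, b: Vec3) -> float:
    """Angle in degrees between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def pointed_device(user_pos: Vec3, pointing: Vec3, devices: Dict[str, Vec3],
                   max_angle_deg: float = 15.0) -> Optional[str]:
    """Return the device whose bearing is closest to the pointing ray, if within tolerance."""
    best_name, best_angle = None, max_angle_deg
    for name, pos in devices.items():
        to_device = tuple(d - u for d, u in zip(pos, user_pos))
        if all(c == 0 for c in to_device):
            continue  # user is standing on the device; bearing is undefined
        ang = angle_between(pointing, to_device)
        if ang <= best_angle:
            best_name, best_angle = name, ang
    return best_name
```

Returning `None` when nothing falls inside the tolerance cone avoids selecting a device when the user is pointing at empty space.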

- This project uses computer vision to monitor a user's bicep curls, tracking the number of repetitions and the weight curled, and displays the results in an intuitive GUI. The hardware contains a camera that records and processes video to track and graph the user's repetitions over time. Users can set personal goals and, while facing the camera, control their Spotify playlist with hand gestures recognized by the trained model. The computer vision model was built with Edge Impulse using a MobileNetV2 FOMO 0.35 convolutional neural network and implemented in MicroPython on an Arduino Nicla Sense board. The model reached 82% accuracy with two users, requiring only 200 kB of RAM while processing at 8 fps.
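FOMO models report the centroids of detected objects, so repetitions can be counted from how a tracked hand or weight centroid moves between frames. This is a minimal sketch under assumptions: the per-frame wrist height is already normalized to [0, 1] (0 with the arm extended, 1 fully curled), and the two thresholds are hypothetical values that would need tuning. Using two thresholds (hysteresis) keeps jitter near a single cutoff from being counted as extra reps.

```python
from typing import Iterable


def count_reps(wrist_height: Iterable[float], low: float = 0.35, high: float = 0.65) -> int:
    """Count curls from per-frame wrist height normalized to [0, 1].

    A rep is counted when the height rises above `high` after having dropped
    below `low`; readings between the two thresholds never change the state.
    """
    reps = 0
    lowered = False  # True once the arm has been extended since the last rep
    for h in wrist_height:
        if h < low:
            lowered = True
        elif h > high and lowered:
            reps += 1
            lowered = False
    return reps
```

For example, a trace that wobbles between 0.6 and 0.7 after one full curl still counts as a single rep, because the arm never drops below the lower threshold in between.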

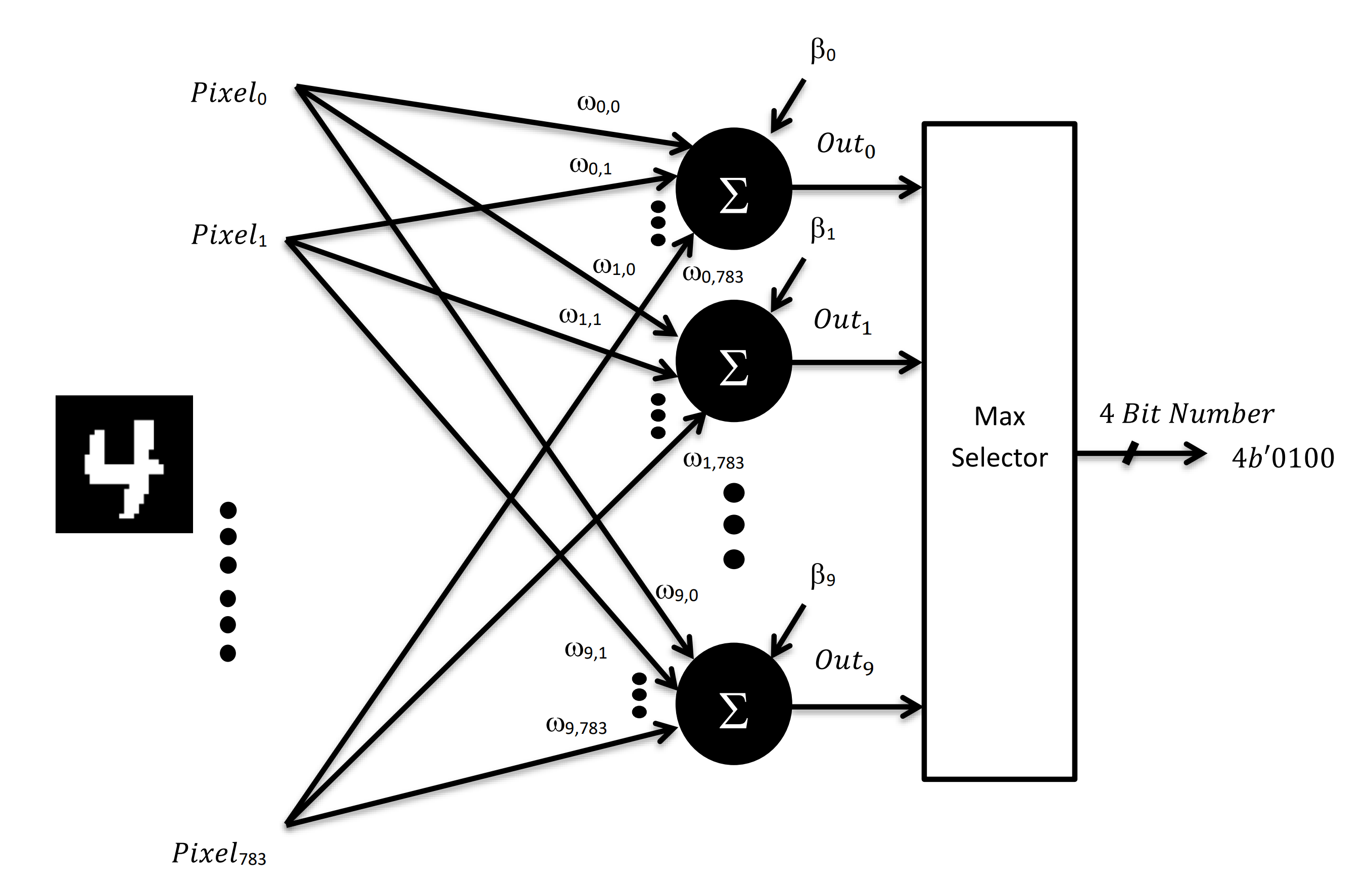

Handwritten Number Classification Accelerator Hardware

- For a class, I implemented a pixel-based neural network ASIC classifier in Verilog to distinguish handwritten digits zero through nine. The goal was to compete with classmates to produce the accelerator design with the best energy-area product while maintaining over 82% classification accuracy and high throughput/low latency. We were given test samples of digits from the MNIST dataset, and our designs were then run on randomly selected samples for the final test/grading. To accomplish this, I designed a highly parallelized computational architecture that used data-based frequency scaling and data-driven selective pixel filtering to eliminate unnecessary MAC units, reducing both the power consumption and the area of the design. I verified my design's accuracy with test benches I wrote myself and simulated its power, area, and energy with Cadence and Synopsys tools. On test day, my design placed first in the class with the lowest energy-area product while maintaining 88% classification accuracy.
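The filtering criterion is not specified here, so as one hedged illustration: pixels whose values barely vary across the training set (such as MNIST's mostly blank border) contribute little to classification, and every pixel dropped removes one multiply-accumulate per first-layer neuron. The Python sketch below ranks pixels by variance, which is an assumed criterion, and counts MACs for a hypothetical fully connected network; it models an offline selection step, not the Verilog design.

```python
from typing import List, Sequence


def select_pixels(images: Sequence[Sequence[float]], keep: int) -> List[int]:
    """Indices of the `keep` highest-variance pixels across flattened training images."""
    n = len(images)
    num_pixels = len(images[0])
    variances = []
    for p in range(num_pixels):
        values = [img[p] for img in images]
        mean = sum(values) / n
        variances.append(sum((v - mean) ** 2 for v in values) / n)
    # Rank pixels by variance (most informative first) and keep the top `keep`.
    ranked = sorted(range(num_pixels), key=lambda p: variances[p], reverse=True)
    return sorted(ranked[:keep])  # ascending order, e.g. for a fixed input wiring map


def macs_per_image(num_inputs: int, hidden: int, outputs: int = 10) -> int:
    """Multiply-accumulate count for one pass through an input->hidden->output network."""
    return num_inputs * hidden + hidden * outputs
```

For example, keeping 196 of MNIST's 784 pixels with a hypothetical 32-neuron hidden layer cuts the MAC count from 25,408 to 6,592 per image.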

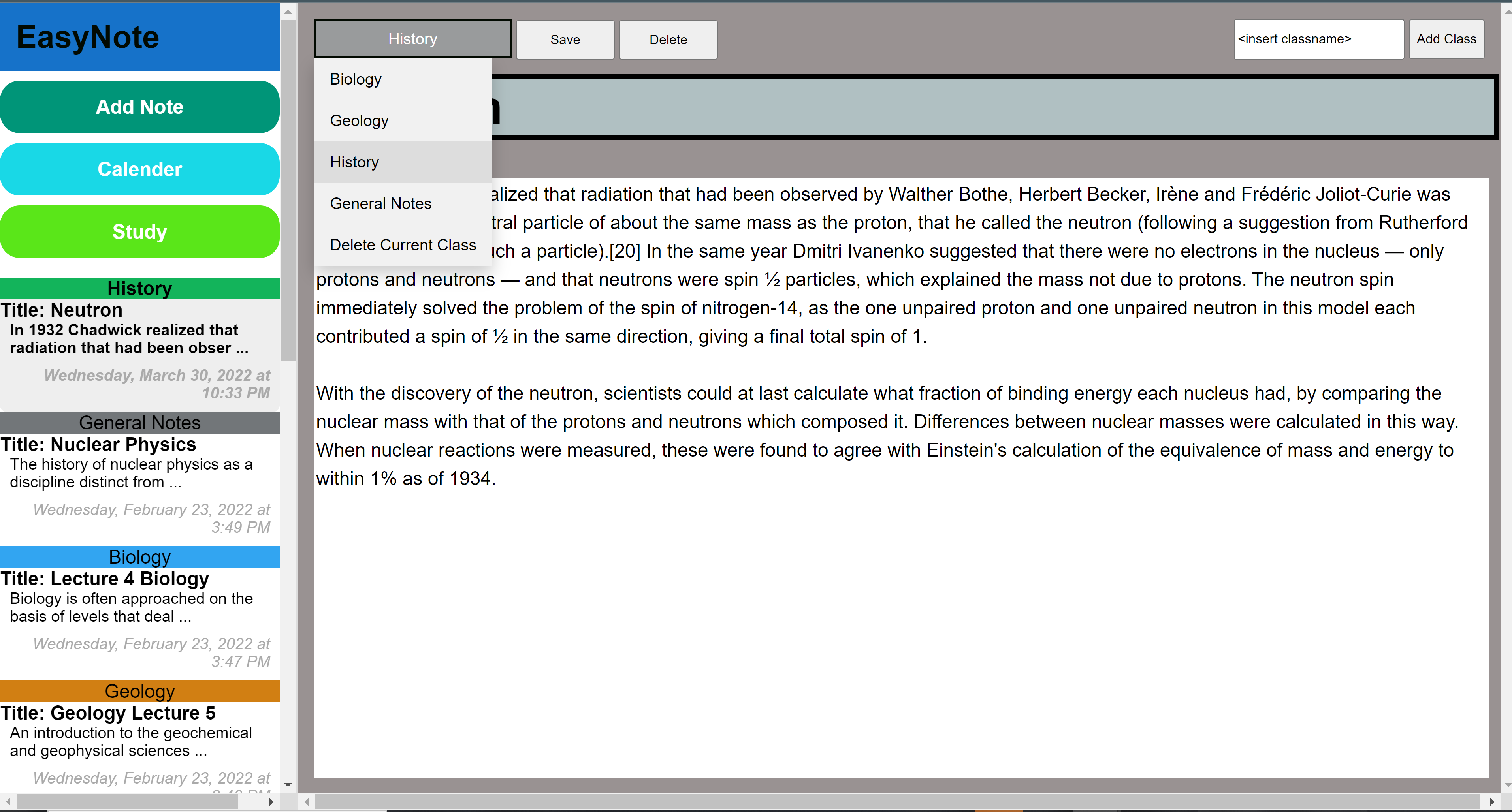

Note App and Study Recommendation Tool

- I designed a note-taking app, built with JavaScript, HTML, and CSS, to help students develop successful study habits. To accomplish this, I used an ML database to parse users' notes and recommend key study words based on their own notes and any lecturer notes they upload. I then developed an algorithm that recommends which notes to study based on note word difficulty, the student's reported understanding, and the importance the student assigns to each note, gathered through daily polls in the app. The app automatically tracks each user's study time per class and gives daily study-time recommendations for each class. To encourage users to meet their daily study requirements through gamification, the app continuously updates progress rings. It also provides general note-taking capabilities such as creating/deleting notes, adding/deleting classes, sorting notes by class, sharing notes with friends, recommending key words to study, and a calendar feature that can sync with Google Calendar.
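The note recommendation combines three signals: word difficulty, self-reported understanding, and student-ranked importance. A minimal sketch, assuming each signal is normalized to [0, 1] and combined as a weighted sum; the weights, field names, and scoring formula are illustrative, not the app's actual algorithm.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Note:
    title: str
    difficulty: float     # 0-1, e.g. derived from key-word difficulty
    understanding: float  # 0-1, student's self-reported understanding (daily poll)
    importance: float     # 0-1, student-ranked importance (daily poll)


def priority(note: Note, w_diff: float = 0.4, w_gap: float = 0.4, w_imp: float = 0.2) -> float:
    """Higher means study sooner: hard, poorly understood, important notes rank first."""
    return (w_diff * note.difficulty
            + w_gap * (1.0 - note.understanding)  # understanding gap
            + w_imp * note.importance)


def recommend(notes: List[Note], k: int = 3) -> List[str]:
    """Titles of the k highest-priority notes."""
    ranked = sorted(notes, key=priority, reverse=True)
    return [n.title for n in ranked[:k]]
```

With these weights, a hard, poorly understood, important note outranks one the student already knows well; tuning the weights shifts how much the daily poll answers matter relative to word difficulty.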

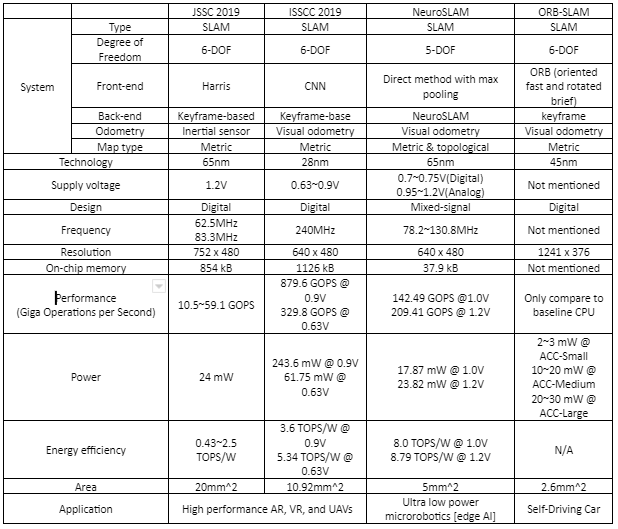

- Computer-vision-based devices are used in everything from warehouse robots to autonomous vehicles. The growing use of simultaneous localization and mapping (SLAM) algorithms has created demand for accurate, low-cost, low-power, real-time processing hardware. I evaluated four top-tier SLAM accelerators and compared their functionality, architecture, and application. Each ASIC accelerator was designed to meet the niche requirements of its specific SLAM algorithm, and those algorithms drive silicon architecture decisions that trade off latency, memory and area use, parallelization, computational capability, and energy consumption. Because of these and other factors, it was hard to make direct comparisons between the accelerators and decide which was best executed. The most promising hardware implementation appeared to be the CNN SLAM accelerator: it demonstrated low-latency decision processing at high resolution, suggesting it could scale to more complex systems such as autonomous driving. It achieved this through high levels of silicon parallelism and utilization. However, the CNN accelerator also required orders of magnitude more power and memory than the other accelerators, which limits its suitability for some applications.

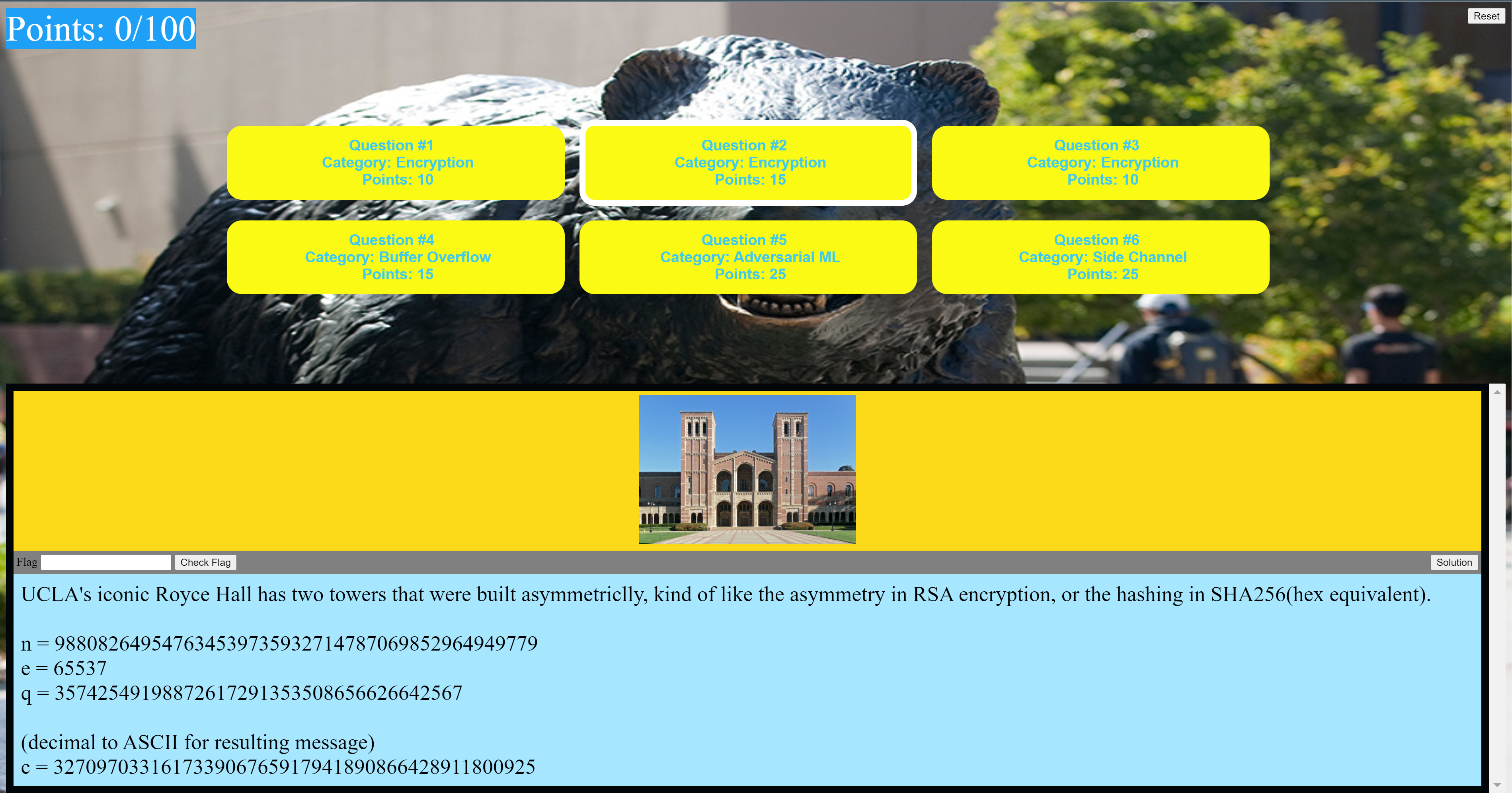

- I designed an interactive cybersecurity capture-the-flag (CTF) website for a cybersecurity class, both to teach future students and to help me study the complex security concepts in the course. The concepts covered include encryption mechanisms, side-channel attacks, buffer overflow attacks, and adversarial machine learning models. The website is available at https://simonschirber.github.io/

University of Wisconsin School Projects

Bachelor's Degree: Biomedical/Electronic Engineering

Focus: Electrical Instrumentation, Minor in Physics

UW Projects

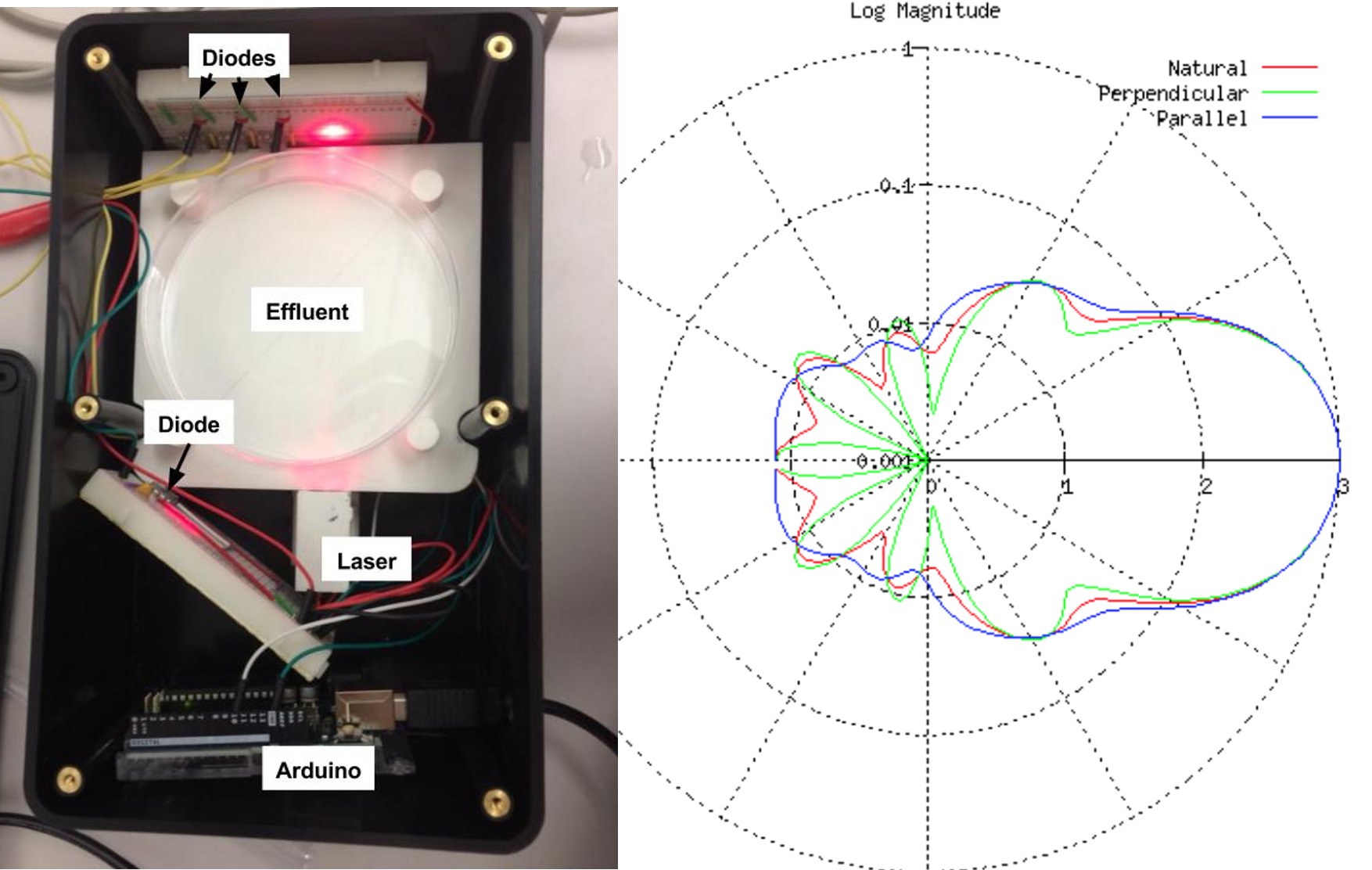

Laser Diode Neutrophil Counter Prototype

- Peritoneal dialysis is a common treatment used to remove waste products from the blood of individuals with end-stage renal disease. The treatment can be performed at home, improving quality of life for patients; however, the current standard method for detecting fungal infections is visual inspection by the patient for cloudy effluent, which indicates the presence of neutrophils and thus infection. This method has proven inconsistent and ineffective: patients often notice cloudy effluent only in late stages of infection, and significant numbers of patients have died from undetected and untreated infections. The goal of this project was to devise a device that could aid automatic at-home detection. I led development of a light-based approach that counts neutrophil concentrations using Rayleigh light scattering/deflection patterns. The prototype used lasers at specific, optimized frequencies and sensitive sensor diodes placed at angles and locations chosen to best detect the presence and concentration of neutrophils at the target concentrations. The product is now pending patent approval and was passed on to other students for further development.

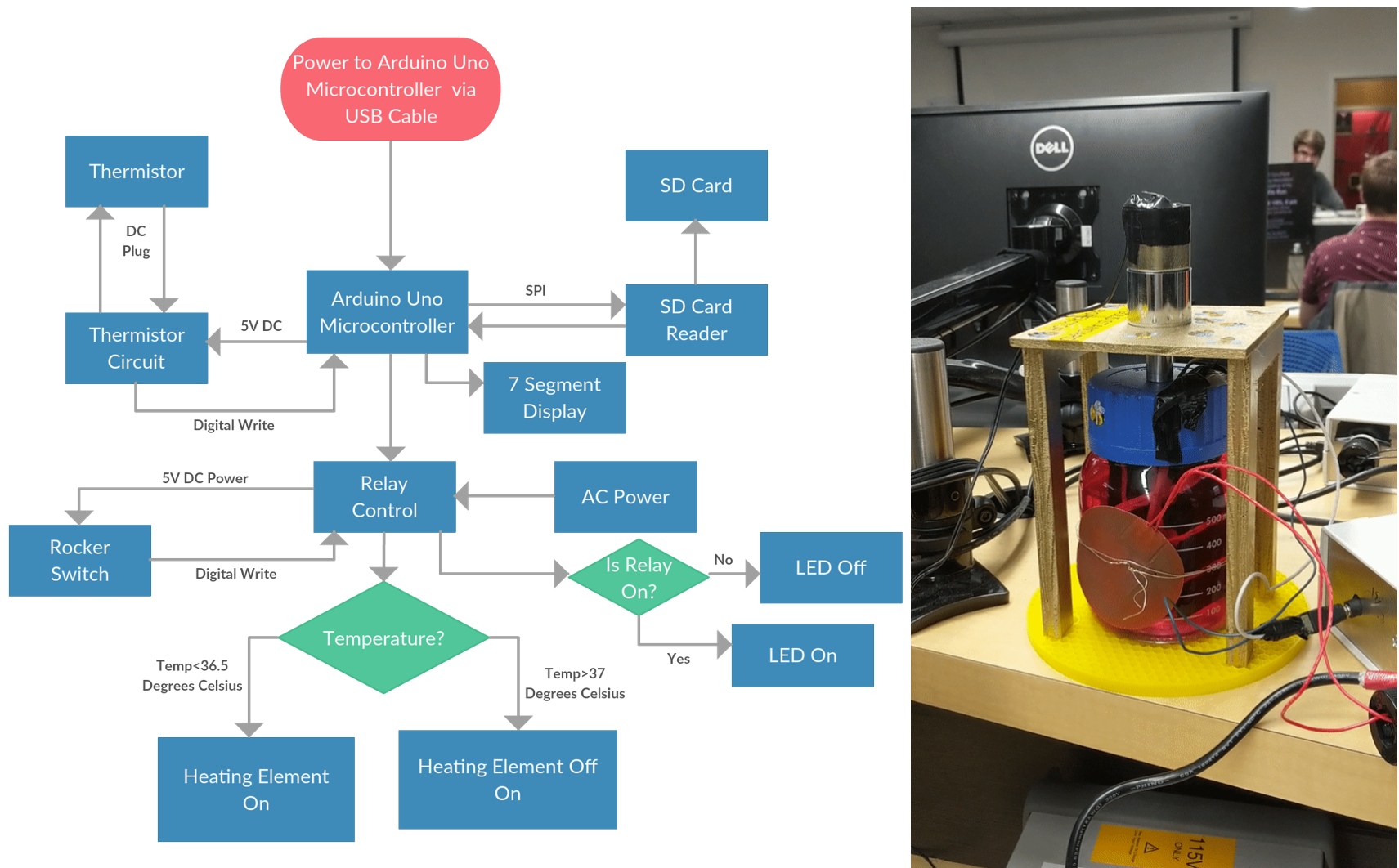

Bone Graft Bioreactor Controls System

- For this design, my team and I were challenged to build a synthetic bone graft bioreactor. The bioreactor had to keep the chosen bone graft samples in a desirable, consistent environment by maintaining temperature and pH, all for under $100. Each team member had a different role in the bioreactor design, and I was responsible for the electronic control and feedback systems. The electronic system I designed consisted of a Wheatstone bridge feeding a differential amplifier to control when the heating element turned on, power control for the motor that spun the grafts to keep the pH consistent, and an SD card that logged pH and temperature readings from sensors in the bioreactor. This was the first physical electronic control system I had designed, and our team received the highest grade in the class for our overall design and its ability to maintain the temperature and pH of the bone grafts.
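The bridge-plus-amplifier stage can be summarized numerically. Assuming one bridge arm is a fixed divider (R1 over R2) and the other is a resistor R3 over a temperature-sensing resistor R_T, the bridge output is V_diff = V_s * (R_T / (R3 + R_T) - R2 / (R1 + R2)), and an ideal differential amplifier multiplies it by its gain. The sketch also assumes an NTC thermistor (resistance rises as temperature falls) and a simple threshold for switching the heater; the component values and function names are hypothetical.

```python
def bridge_voltage(v_supply: float, r1: float, r2: float, r3: float, r_t: float) -> float:
    """Differential output V_b - V_a of a Wheatstone bridge.

    Left arm:  r1 (top) over r2 (bottom)          -> V_a = Vs * r2 / (r1 + r2)
    Right arm: r3 (top) over thermistor r_t (bottom) -> V_b = Vs * r_t / (r3 + r_t)
    """
    v_a = v_supply * r2 / (r1 + r2)
    v_b = v_supply * r_t / (r3 + r_t)
    return v_b - v_a


def heater_on(v_diff: float, gain: float, threshold: float) -> bool:
    """Ideal differential amplifier (Vout = gain * v_diff) compared against a threshold.

    With an NTC thermistor, r_t rises as the sample cools, so v_diff grows;
    the heater switches on once the amplified signal exceeds the threshold.
    """
    return gain * v_diff > threshold
```

When all four resistances are equal the bridge is balanced and outputs 0 V, so the balance point sets the temperature setpoint. A comparator with hysteresis is a common addition to keep the heater from rapidly toggling around that setpoint.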

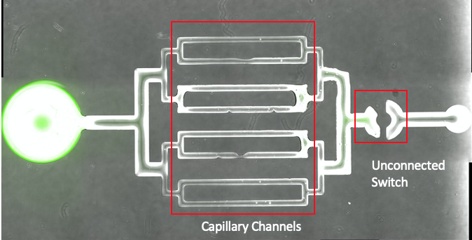

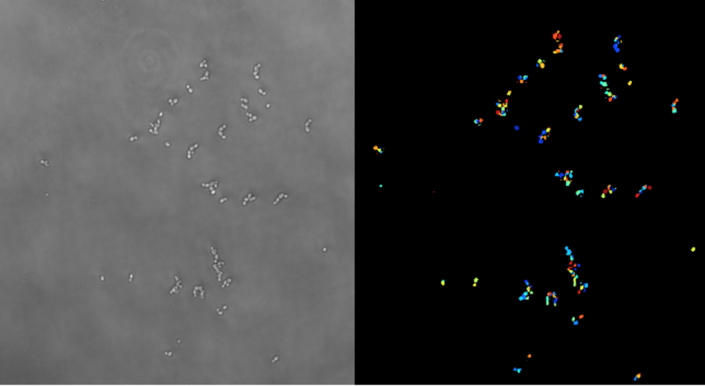

Biofilm Quantification Platform

- The dispersion of cells from biofilms allows fungi to spread infection. This dispersion process is poorly understood and understudied because in vivo conditions are difficult to replicate. For this design project, I worked as team leader, managing our collaboration with a lab that had developed a novel microfluidic platform. The platform was made by etching silicon wafers with photolithography to create microscale under-oil fluidic platforms that could be strategically controlled to research infection via biofilms. The lab wanted to automate its process to perform research at large scale, which it had been struggling to do. After evaluating the existing design, I realized that one of its biggest bottlenecks was the quantification process. Originally, to quantify the cells, researchers had to take images and count the cells that reached the end of the channels at the end of each experiment. This process was time-consuming, prone to human error, and gave no information about the flow rate or timing of biofilm cell dispersion. To improve it, I designed a camera setup that monitored all of the capillary channels, counted both the number and the speed of the biofilm cells at every point in time, and saved the data for further analysis using Python. This automation saved researchers hours of quantification time and allowed a more detailed analysis of the biofilm's spatiotemporal profiles. Because of its success and contributions, the design won the first-place Design Excellence Award.
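How cell speed was measured is not described here. One common approach, sketched under stated assumptions, links detections in consecutive frames by greedy nearest-neighbor matching and divides each displacement by the frame interval. Positions are assumed to be one-dimensional distances along a single channel, and `max_jump`, the function names, and the units are hypothetical; a production tracker might use optimal assignment (e.g. the Hungarian algorithm) instead of greedy matching.

```python
from typing import List, Tuple


def track_speeds(frames: List[List[float]], dt: float,
                 max_jump: float) -> List[Tuple[int, float]]:
    """Greedy nearest-neighbor matching of cell positions between consecutive frames.

    `frames[i]` holds cell positions (e.g. micrometres along one channel)
    detected in frame i. Returns (frame_index, speed) for every matched cell,
    where speed = displacement / dt. Detections farther than `max_jump` from
    every unmatched previous cell are treated as new cells with no speed yet.
    """
    speeds = []
    for i in range(1, len(frames)):
        unmatched = list(frames[i - 1])
        for pos in frames[i]:
            if not unmatched:
                break  # remaining detections are new cells
            nearest = min(unmatched, key=lambda p: abs(p - pos))
            if abs(nearest - pos) <= max_jump:
                speeds.append((i, (pos - nearest) / dt))
                unmatched.remove(nearest)  # each previous cell matches at most once
    return speeds


def count_arrived(frame: List[float], channel_end: float) -> int:
    """Number of cells at or beyond the end of the channel in one frame."""
    return sum(1 for pos in frame if pos >= channel_end)
```

Logging `count_arrived` for every frame, rather than only at the end of an experiment, is what turns a single end-point count into a time profile of dispersion.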